a16z "Three Major Predictions for AI Agents in 2026": The Disappearance of Input Boxes, Agent-First Usage, and the Rise of Voice Agents

a16z predicts that AI is evolving from a passive tool to an active agent.

Written by: Long Yue

Source: Wallstreetcn

At the recent "Big Ideas for 2026" online seminar hosted by venture capital firm Andreessen Horowitz (a16z), the firm's partners outlined a clear trajectory for AI: it is evolving from a chat tool that waits for instructions into an "agent" that proactively executes tasks.

They also put forward three predictions that could reshape industries: user interaction will shift from "prompting" to "executing," product design will move from "human-centered" to "agent-first," and voice agents will move from technical demos to large-scale deployment.

Prediction One: The disappearance of the input box.

Marc Andrusko, a partner on the a16z AI application investment team, boldly predicts, "By 2026, the input box as the main user interface for AI applications will disappear." He believes the next generation of AI applications will no longer require users to type out instructions; instead, they will observe user behavior, intervene proactively, and propose actions for the user to review.

This shift marks a huge leap in the commercial value of AI. Andrusko points out, "We used to focus on the $300-400 billion in global software spending each year, but now we're excited about the $13 trillion in labor spending in the US alone. This expands the market opportunity for software by about 30 times."
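As a rough check on the "about 30 times" figure, the arithmetic can be sketched as follows. The dollar amounts are the ones quoted above; the variable and function names are illustrative. Note that the comparison sets US labor spending against global software spending, exactly as the quote does.

```python
# Figures quoted in the article, in US dollars.
# Variable and function names are illustrative, not from a16z.
SOFTWARE_SPEND_LOW = 300e9    # lower bound of annual global software spending
SOFTWARE_SPEND_HIGH = 400e9   # upper bound of annual global software spending
US_LABOR_SPEND = 13e12        # annual US labor spending


def expansion_multiple(new_market: float, old_market: float) -> float:
    """Return how many times larger the new market is than the old one."""
    return new_market / old_market


multiple_vs_high = expansion_multiple(US_LABOR_SPEND, SOFTWARE_SPEND_HIGH)
multiple_vs_low = expansion_multiple(US_LABOR_SPEND, SOFTWARE_SPEND_LOW)

print(f"{multiple_vs_high:.1f}x to {multiple_vs_low:.1f}x")
```

On these numbers the multiple falls between roughly 32x and 43x, so "about 30 times" corresponds to the upper end of the software spending range.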

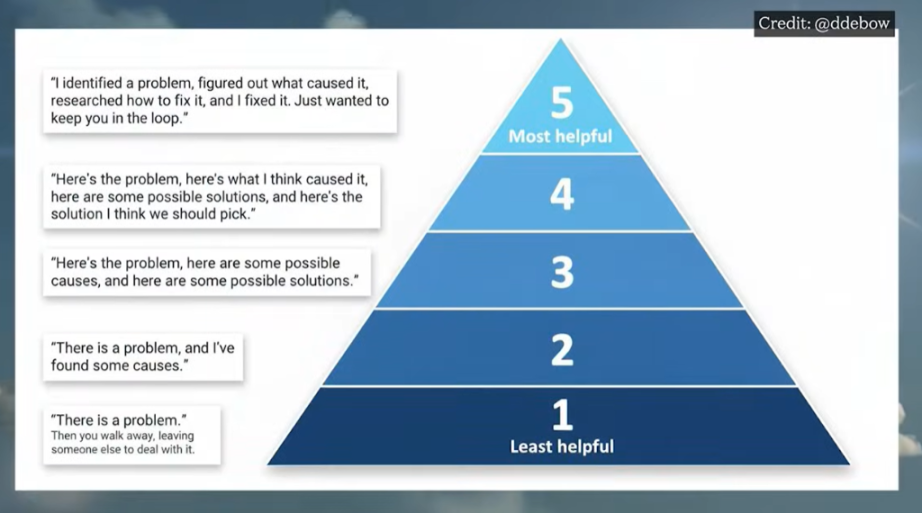

He compares future AI agents to the top "S-level employees": "The most proactive employees identify problems, diagnose root causes, implement solutions, and only then come to you and say: 'Please approve the solution I found.' This is the future of AI applications."

Prediction Two: "Agent-first" and machine legibility.

Stephanie Zhang, a16z growth investment partner, proposed a disruptive design logic: software will no longer be designed for the human eye. She points out: "What is important for human consumption may no longer be important for agent consumption. The new optimization direction is not visual hierarchy, but machine legibility."

In Zhang's view, conventions that were optimized for human attention, such as the "5W1H" principle or polished user interfaces, will be rethought in the era of agents. She predicts: "We may see a large amount of ultra-personalized, high-frequency content generated for agent interests, which is like keyword stuffing in the agent era."

This shift will profoundly affect all aspects from content creation to software design.

Prediction Three: The rise of voice agents.

Meanwhile, Olivia Moore, a partner on the a16z AI application investment team, observed, "AI voice agents are beginning to gain a foothold." She stated that voice agents have evolved from a science fiction concept into systems that real enterprises are purchasing and deploying at scale. In healthcare, banking and finance, and recruitment especially, voice agents are favored for their reliability, their consistent compliance, and their ability to ease labor shortages.

She shared an interesting finding: "In banking and financial services, voice AI actually performs better, because humans are very good at violating compliance regulations, while voice AI can follow them precisely every time."

Moore emphasized, "AI won't take your job, but a person using AI will." This suggests that traditional call centers and BPO industries will undergo profound changes, and service providers who can use AI technology to offer lower prices or greater processing capacity will gain a competitive advantage.

The core points of this online seminar:

- The end of UI: The era of the prompt box as the main interaction interface is coming to an end, and AI will shift from "passive response" to "active observation and intervention."

- 30-fold market growth: AI's target market is shifting from $300-400 billion in global software spending to $13 trillion in US labor spending, fundamentally changing the business logic.

- S-level employee model: The ideal AI should be like a highly proactive employee: discover problems, diagnose causes, provide solutions and execute, leaving only the final step for human confirmation.

- Machine legibility: Software design goals are shifting from "human-first" to "agent-first," and visual hierarchy UI will no longer be core.

- Alienation of content creation: Brand competition will shift from attracting human attention to "Generative Engine Optimization" (GEO), and there may even be a large amount of "high-frequency content" generated specifically for AI crawling.

- Compliance advantages of voice AI: In high-threshold industries such as finance, voice AI outperforms humans because it can comply 100% with regulations and its actions are traceable.

- Entry into healthcare and government services: Voice agents are easing the high-turnover problem in healthcare and are expected to address public service pain points such as 911 and DMV calls in the future.

- Industrialization of voice AI: Voice AI is developing into a complete industry rather than a single market, with winners and large opportunities at every layer of the value chain, from foundational models to platform-level applications.

- From tool to "AI employee": AI is no longer a simple auxiliary tool, but a digital employee capable of independently handling complete business cycles.

Full transcript of the a16z AI team seminar (translated by AI tools):

Director 00:00 Welcome to "Big Ideas for 2026." We will hear from Marc Andrusko about the evolution of AI user interfaces and the fundamental changes in how we interact with intelligent systems. Stephanie Zhang will discuss the significance of designing for agents rather than humans, a shift that is reshaping product development. Olivia Moore will share her views on the rise of AI voice agents and their growing role in our daily lives. These are not just predictions; they are insights from people working directly with founders and companies building the future world.

Marc Andrusko 00:31 I'm Marc Andrusko, a partner on our AI application investment team. My big idea for 2026 is the disappearance of the input box as the main user interface for AI applications. The next wave of applications will require far fewer prompts. They will observe what you are doing and proactively intervene, providing action plans for your review.

Marc Andrusko 00:49 The opportunity we are attacking used to be the $300-400 billion in global software spending each year. Now what excites us is the $13 trillion in labor spending in the US alone. This expands the market opportunity or total addressable market (TAM) for software by about 30 times. If you start from here and then think, okay, if we all want this software to work for us, ideally, its working ability should be at least as good as humans, or even better, right? So I like to think, hmm, what do the best employees do? What do the best human employees do? I've been talking a lot recently about a chart circulating on Twitter. It's a pyramid about five types of employees and why those with the most agency are the best. If you start at the bottom of the pyramid, those are the people who discover a problem and then come to you for help, asking what to do. This is the employee with the lowest agency.

But if you go to S-level, that is, the employee with the highest agency you can have, they will discover a problem, do the necessary research to diagnose the root cause, study multiple possible solutions, implement one of them, and then keep you informed, or come to you at the last moment and say, "Please approve the solution I found." I think this is what the future of AI applications looks like. And I think this is what everyone wants. This is what we are all working towards. So I am very confident that we are almost there. I think large language models (LLMs) are continuously getting better, faster, and cheaper, and I think to some extent, user behavior will still require human involvement at the final stage to approve things, especially in high-risk scenarios. But I think the models are fully capable of reaching a level where they can propose very smart suggestions on your behalf, and you basically just need to click confirm.

Marc Andrusko 02:27 As you know, I am very fascinated by the concept of AI-native CRM. I think this is a perfect example of what these proactive applications might look like. In today's world, a salesperson might open their CRM, browse all open opportunities, check the day's calendar, and then think, okay, what actions can I take now to have the greatest impact on my sales funnel and my ability to close. In the CRM of the future, your AI agent or AI CRM should be doing all these things for you continuously, not only identifying the most obvious opportunities in your pipeline, but also going through your emails from the past two years and digging out, you know, this was once a promising lead, but you let it go cold. Maybe we should send them this email and bring them back into your process, right? So I think the opportunities are endless in drafting emails, organizing calendars, reviewing old call records, and so on.

Marc Andrusko 03:22 Ordinary users will still want that final mile of approval. In almost 100% of cases, they will want the human part of "human in the loop" to be the final decision maker. That's fine.

Marc Andrusko 03:33 I think this is the way it naturally evolves. I can imagine a world where power users will put in a lot of extra effort to train whatever AI application they use to know as much as possible about their behavior and work style. These applications will use larger context windows and the memory functions already integrated into many large language models, allowing power users to truly trust the application to complete 99.9% or even 100% of the work. They will be proud of the number of tasks that can be completed without human approval.
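The approval pattern described above, where the agent proposes and a human confirms unless the user has chosen to trust the agent on low-risk work, can be sketched as follows. This is a minimal illustration, not a description of any real product: `ProposedAction`, `review_actions`, and the `high_risk` flag are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProposedAction:
    """A hypothetical action the agent has prepared for review."""
    summary: str      # what the agent wants to do
    high_risk: bool   # whether a mistake would be costly


def review_actions(
    actions: List[ProposedAction],
    ask_human: Callable[[ProposedAction], bool],
    auto_approve_low_risk: bool,
) -> List[ProposedAction]:
    """Return the actions cleared to run.

    High-risk actions always go to the human. Low-risk actions skip the
    human only when the user has opted in to auto-approval.
    """
    approved = []
    for action in actions:
        needs_human = action.high_risk or not auto_approve_low_risk
        if not needs_human or ask_human(action):
            approved.append(action)
    return approved


proposals = [
    ProposedAction("Re-engage a lead that went cold", high_risk=False),
    ProposedAction("Send revised contract terms", high_risk=True),
]

# A power user who trusts the agent on low-risk work but rejects the rest.
cleared = review_actions(proposals, ask_human=lambda a: False,
                         auto_approve_low_risk=True)
print([a.summary for a in cleared])
```

In this sketch an ordinary user would pass `auto_approve_low_risk=False`, so every proposal is routed through `ask_human`, matching the "final mile of approval" described above.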

Stephanie Zhang 04:09 Hi, my name is Stephanie Zhang, and I'm an investment partner on the a16z growth investment team. My big idea for 2026 is: create for agents, not for humans. One thing I'm very excited about for 2026 is that people will have to start changing the way they create. This covers everything from content creation to application design. People are starting to interact with systems like the web or applications through agents as intermediaries. What matters for human consumption will not matter in the same way for agent consumption.

Stephanie Zhang 04:41 When I was in high school, I took a journalism class. In journalism class, we learned the importance of starting the first paragraph of a news article with the 5 Ws and 1 H (who, what, when, where, why, how), and starting feature stories with a hook. Why? To attract human attention. Humans might miss an insightful statement buried on page five, but agents won't.

Stephanie Zhang 05:02 For years, we've optimized for predictable human behavior. Do you want to be one of the first search results returned by Google? Do you want to be one of the first products listed on Amazon? This optimization applies not only to the web but also to how we design software. Applications are designed for human eyes and clicks. Designers optimize for good user interfaces (UI) and intuitive flows. But as agent usage grows, visual design will become less important for overall understanding. Previously, when an incident occurred, engineers would go into their Grafana dashboards and try to piece together what happened. Now, AI SREs (site reliability engineers) receive telemetry data. They analyze this data and report hypotheses and insights directly in Slack for humans to read.

Previously, sales teams had to click and browse CRMs like Salesforce to gather information. Now, agents fetch this data and summarize insights for them. We are no longer designing for humans, but for agents. The new optimization standard is not visual hierarchy, but machine legibility. This will change how we create and the tools we use. What are agents looking for? That's a question we don't know the answer to. But what we do know is that agents are much better than humans at reading all the text in an article, while humans may only read the first few paragraphs. There are many tools on the market that different organizations use to make sure they show up when consumers prompt ChatGPT and ask for the best corporate credit card or the best shoes. We call these GEO (generative engine optimization) tools, and people are using them, but everyone is asking one question: What do AI agents want to see?

Stephanie Zhang 06:43 I like this question about when humans might completely exit the loop. We've already seen this happening in some cases. Our portfolio company Decagon is already autonomously answering questions for many of their clients. But in other cases, such as security operations or incident resolution, we usually see more humans in the loop, with AI agents first trying to figure out the problem, analyzing it, and providing humans with different potential scenarios. These tend to be cases with greater responsibility and more complex analysis, where we see humans staying in the loop. And until the models and technology reach extremely high accuracy, humans may stay in the loop longer.

Stephanie Zhang 07:33 I don't know if agents will watch Instagram Reels. It's really interesting, at least technically, that optimizing for machine legibility, for insight, and for relevance is really important, especially compared to the past, which focused more on attracting people and grabbing attention in flashy ways. What we've already seen are cases of massive, ultra-personalized content; maybe you're not creating one extremely relevant, highly insightful article, but creating a lot of low-quality content targeting different things you think agents might want to see. This is almost like the keyword stuffing of the agent era, where the cost of content creation approaches zero and creating a lot of content becomes very easy. This is the potential risk of generating a large amount of content to try to attract agent attention.

Olivia Moore 08:48 I'm Olivia Moore, a partner on our AI application investment team. My big idea for 2026 is that AI voice agents will begin to gain a foothold. In 2025, we saw voice agents break through from something that seemed like science fiction to systems that real enterprises are purchasing and deploying at scale. I'm excited to see voice agent platforms expand, working across platforms and modalities to handle complete tasks, bringing us closer to the vision of true AI employees. We've already seen enterprise clients in almost every vertical testing voice agents, if not already deploying them at considerable scale.

Olivia Moore 09:25 Healthcare may be the biggest one here. We're seeing voice agents appear in almost every part of the healthcare stack, including calls to insurance companies, pharmacies, providers, and perhaps more surprisingly, patient-facing calls. This could be basic functions like scheduling and reminders, but also more sensitive calls, such as post-operative follow-up calls and even initial psychiatric intake calls, all handled by voice AI. Honestly, I think one of the main drivers here is the high turnover and hiring difficulties in the healthcare industry, making voice agents that can perform tasks with a certain reliability a pretty good solution. Another similar category is banking and financial services. You might think there's too much compliance and regulation for voice AI to operate there. But it turns out this is an area where voice AI actually performs better, because humans are very good at violating compliance rules and regulations, while voice AI can follow them every time. And importantly, you can track the performance of voice AI over time. Finally, another area where voice technology is making breakthroughs is recruitment. This covers everything from frontline retail jobs to entry-level engineering positions and even mid-level consultant roles. With voice AI, you can create an experience for candidates where they can interview immediately at any time that suits them, and then they move into the rest of the human-led recruitment process.

Olivia Moore 10:46 As the underlying models get better, we've seen huge improvements in accuracy and latency this year. In fact, in some cases, I've heard of voice agent companies deliberately slowing down their agents or introducing background noise to make them sound more human. When it comes to BPO (business process outsourcing) and call centers, I think some will experience a smoother transition, while others may face a steeper cliff in the face of threats from AI, especially voice AI. It's a bit like people say, AI won't take your job, but a person using AI will.

Olivia Moore 11:16 What we're seeing is that many end customers may still just want to buy solutions, not technology they have to implement themselves. So in the near to medium term, they may still use call centers or BPOs. But they may choose one that can offer a lower price or handle more volume because it uses AI. Interestingly, in some regions, on a per-full-time-employee basis, humans are actually still cheaper than top voice AI. So as the models get better, it will be interesting to watch whether costs come down there and whether call centers in those markets face greater threats than they do now.

Olivia Moore 11:50 AI is actually very good at multilingual conversations and heavy accents. Many times, when I'm in a meeting, I might miss a word or phrase, and then I'll check my Granola meeting notes, and it's recorded perfectly. So I think this is a great example of what most ASR (automatic speech recognition) or speech-to-text providers can do.

Olivia Moore 12:08 There are several use cases I hope to see more of next year, including anything related to government. We are investors in Prepared 911, which handles non-emergency calls. If you can handle 911 calls with voice AI, you should also be able to handle DMV calls and any other government-related calls, which are now very frustrating for consumers and equally frustrating for employees on the other end of the line.

Olivia Moore 12:32 I'd also love to see more consumer-grade voice AI. So far, it's mainly B2B (business-to-business), because it's so obvious to use much cheaper AI to replace or supplement humans on the phone. In the consumer voice space, one category I'm excited about is around broader health and wellness. We've already seen voice companions emerge in assisted living facilities and nursing homes, both as companions for residents and to track different health indicators over time. We see voice AI as an industry rather than a market, and to us, that means there will be winners at every layer of the technology stack. If you're interested in voice AI or want to start a business in voice AI, I suggest you try out the models yourself. There are many great platforms, like ElevenLabs, where you can test creating your own voice and your own voice agent. You'll get a good sense of what's possible and what will happen in the future.

Disclaimer: The content of this article solely reflects the author's opinion and does not represent the platform in any capacity. This article is not intended to serve as a reference for making investment decisions.
